Face Detection

This article shows how to use the Horned Sungem on Android to run the SSD-Mobilenet convolutional neural network for face detection.

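Preparation

- For environment setup and other details, see the Quick Start in Hello 2018; they are not repeated here.

- Download the model file graph_face_SSD needed for face detection. In Android Studio, create an assets directory under the current module and copy the downloaded model file into it.

- The project needs to process images, so it uses the javacv library. You can download it from GitHub yourself, or follow the link and copy it from the sample project into your own project.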

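Implementation

1. Implementation steps:

Open the device

    int status = openDevice();
    if (status != ConnectStatus.HS_OK) {
        return;
    }

Transfer the graph file to the Horned Sungem

    int id = allocateGraphByAssets("graph_face_SSD");

With a single model, id is always 0 and you do not need to keep track of it.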

Process the image data; there are two modes

Using the Horned Sungem's built-in camera:

    byte[] bytes = getImage(0.007843f, 1.0f, id);

Using an external image source: in this mode, the data passed to the Horned Sungem must be preprocessed first. The example below uses frames from the phone camera, with an image size of 1280*720:

    // Copy the 1280*720 camera frame into a bitmap
    SoftReference<Bitmap> softRef = new SoftReference<>(Bitmap.createBitmap(1280, 720, Bitmap.Config.ARGB_8888));
    Bitmap bitmap = softRef.get();
    allocations[0].copyTo(bitmap);
    // Scale the frame down to 300*300
    Matrix matrix = new Matrix();
    matrix.postScale(300f / 1280, 300f / 720);
    Bitmap newbm = Bitmap.createBitmap(bitmap, 0, 0, 1280, 720, matrix, true);
    int[] ints = new int[300 * 300];
    newbm.getPixels(ints, 0, 300, 0, 0, 300, 300);
    // Convert each pixel to three floats (R, G, B), mapping 0..255 to roughly -1..1
    float[] float_tensor = new float[300 * 300 * 3];
    for (int j = 0; j < 300 * 300; j++) {
        float_tensor[j * 3] = Color.red(ints[j]) * 0.007843f - 1;
        float_tensor[j * 3 + 1] = Color.green(ints[j]) * 0.007843f - 1;
        float_tensor[j * 3 + 2] = Color.blue(ints[j]) * 0.007843f - 1;
    }
    int status_load = mFaceDetectorBySelfThread.loadTensor(float_tensor, float_tensor.length, id);
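The normalization step in the loop above can be tried outside Android. The following is a minimal sketch, not the SDK's own code: the class name PixelNormalizer and the method toTensor are hypothetical, and the bit shifts stand in for android.graphics.Color.red/green/blue so that the example runs on a plain JVM. It uses the same scale factor, 0.007843 (roughly 1/127.5), so channel value 0 maps to -1 and 255 maps to just under 1.

```java
import java.util.Arrays;

/** Sketch: convert packed ARGB pixels to an interleaved R, G, B float tensor. */
final class PixelNormalizer {
    // Scale factor taken from the article's code; roughly 1 / 127.5.
    static final float SCALE = 0.007843f;

    /** Maps every 8-bit channel value v to v * SCALE - 1 (hypothetical helper). */
    static float[] toTensor(int[] argbPixels) {
        float[] tensor = new float[argbPixels.length * 3];
        for (int j = 0; j < argbPixels.length; j++) {
            int p = argbPixels[j];
            int r = (p >> 16) & 0xFF; // equivalent to Color.red(p)
            int g = (p >> 8) & 0xFF;  // equivalent to Color.green(p)
            int b = p & 0xFF;         // equivalent to Color.blue(p)
            tensor[j * 3] = r * SCALE - 1f;
            tensor[j * 3 + 1] = g * SCALE - 1f;
            tensor[j * 3 + 2] = b * SCALE - 1f;
        }
        return tensor;
    }

    public static void main(String[] args) {
        // One pure red pixel and one mid-gray pixel.
        float[] t = toTensor(new int[] {0xFFFF0000, 0xFF808080});
        System.out.println(Arrays.toString(t));
    }
}
```

Keeping this step in a small pure function makes it easy to check the value range before the tensor is handed to loadTensor.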

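Get the returned result. For this mobilenetssd type of network, the result is parsed as follows:

    float[] floats = getResult(id);
    int num = (int) floats[0]; // number of detected faces
    for (int i = 0; i < num; i++) {
        // Coordinates are scaled to screen pixels
        int x1 = (int) (floats[7 * (i + 1) + 3] * screenwidth);
        int y1 = (int) (floats[7 * (i + 1) + 4] * screenheight);
        int x2 = (int) (floats[7 * (i + 1) + 5] * screenwidth);
        int y2 = (int) (floats[7 * (i + 1) + 6] * screenheight);
        int width = x2 - x1;
        int height = y2 - y1;
        int percentage = (int) (floats[7 * (i + 1) + 2] * 100);
        // Skip low-confidence detections
        if (percentage <= 55) {
            continue;
        }
        // Skip boxes that cover most of the screen
        if (width >= screenwidth * 0.8 || height >= screenheight * 0.8) {
            continue;
        }
        // Skip boxes with invalid coordinates
        if (x1 < 0 || x2 < 0 || y1 < 0 || y2 < 0 || width < 0 || height < 0) {
            continue;
        }
    }

The result is passed to the current Activity through the handler mechanism. HornedSungemFrame is the entity class that carries the image and the result, and DrawView draws the face detection boxes on the screen. See the sample project for the full code.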

2. Notes:

Differences when handling the image: with the Horned Sungem's built-in camera, if zoom is true the captured image is a 640*360 BGR image; if zoom is false it is a 1920*1080 image. Pay attention to the byte order: the 1080P data is sent channel by channel, with all of one channel's data sent before the next channel starts. Sample handling code:

    opencv_core.IplImage bgrImage = null;
    if (zoom) {
        FRAME_W = 640;
        FRAME_H = 360;
        bgrImage = opencv_core.IplImage.create(FRAME_W, FRAME_H, opencv_core.IPL_DEPTH_8U, 3);
        bgrImage.getByteBuffer().put(bytes);
    } else {
        FRAME_W = 1920;
        FRAME_H = 1080;
        // Rearrange the channel-by-channel data into interleaved pixels
        byte[] bytes_rgb = new byte[FRAME_W * FRAME_H * 3];
        for (int i = 0; i < FRAME_H * FRAME_W; i++) {
            bytes_rgb[i * 3 + 2] = bytes[i]; // r
            bytes_rgb[i * 3 + 1] = bytes[FRAME_W * FRAME_H + i]; // g
            bytes_rgb[i * 3] = bytes[FRAME_W * FRAME_H * 2 + i]; // b
        }
        bgrImage = opencv_core.IplImage.create(FRAME_W, FRAME_H, opencv_core.IPL_DEPTH_8U, 3);
        bgrImage.getByteBuffer().put(bytes_rgb);
    }
    opencv_core.IplImage image = opencv_core.IplImage.create(FRAME_W, FRAME_H, opencv_core.IPL_DEPTH_8U, 4);
    cvCvtColor(bgrImage, image, CV_BGR2RGBA);

Handling the getResult return value:

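- Every 7 numbers form one group

- The first number of the first group is the number of detected faces; the remaining 6 numbers of that group are not used

- In each following group, the third number is the confidence, and the last 4 numbers are the coordinates of the box edges (the parsing code above reads them as x1, y1, x2, y2)

- All values are float32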
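The layout described in the list above can be exercised without a device. The following is a minimal sketch under stated assumptions: the class SsdResultParser, its nested Box type, and the parse method are hypothetical names, the array is synthetic, and the thresholds (confidence above 55, box smaller than 80% of the screen) are copied from the parsing code in the implementation steps. A bounds check is added so a truncated array does not cause an exception.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: parse a 7-floats-per-group result array into pixel-space boxes. */
final class SsdResultParser {
    /** One accepted detection, in screen pixels (hypothetical type). */
    static final class Box {
        final int x1, y1, x2, y2, percentage;
        Box(int x1, int y1, int x2, int y2, int percentage) {
            this.x1 = x1; this.y1 = y1; this.x2 = x2; this.y2 = y2;
            this.percentage = percentage;
        }
    }

    static List<Box> parse(float[] floats, int screenWidth, int screenHeight) {
        List<Box> boxes = new ArrayList<>();
        if (floats == null || floats.length < 7) {
            return boxes;
        }
        int num = (int) floats[0]; // group 0, first value: detection count
        for (int i = 0; i < num; i++) {
            int base = 7 * (i + 1); // start of detection i's group
            if (base + 6 >= floats.length) {
                break; // array shorter than the count claims
            }
            int percentage = (int) (floats[base + 2] * 100);
            int x1 = (int) (floats[base + 3] * screenWidth);
            int y1 = (int) (floats[base + 4] * screenHeight);
            int x2 = (int) (floats[base + 5] * screenWidth);
            int y2 = (int) (floats[base + 6] * screenHeight);
            int width = x2 - x1;
            int height = y2 - y1;
            if (percentage <= 55) continue;
            if (width >= screenWidth * 0.8 || height >= screenHeight * 0.8) continue;
            if (x1 < 0 || x2 < 0 || y1 < 0 || y2 < 0 || width < 0 || height < 0) continue;
            boxes.add(new Box(x1, y1, x2, y2, percentage));
        }
        return boxes;
    }

    public static void main(String[] args) {
        float[] sample = {
            2, 0, 0, 0, 0, 0, 0,                     // header group: 2 detections
            0, 1, 0.75f, 0.125f, 0.25f, 0.5f, 0.75f, // kept
            0, 1, 0.25f, 0.125f, 0.25f, 0.5f, 0.75f  // dropped: confidence too low
        };
        for (Box b : parse(sample, 800, 400)) {
            System.out.println(b.x1 + "," + b.y1 + " - " + b.x2 + "," + b.y2 + " @ " + b.percentage + "%");
        }
    }
}
```

Returning a list rather than drawing inside the loop keeps the parsing testable and leaves rendering to a view such as DrawView.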

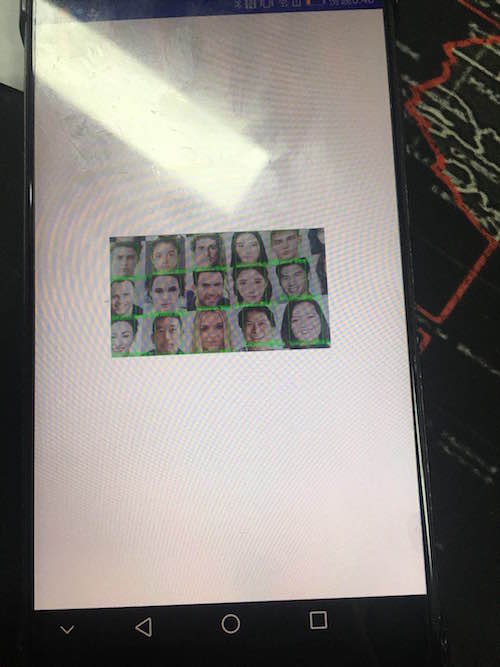

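3. Results:

Friendly reminder: most Android devices only have USB 2.0, so 1080P images are not recommended; the transfer is slow and the display will stutter. Use 360P images instead and scale them to fill the screen.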
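A rough calculation shows why the 1080P stream is so much heavier. The sketch below is illustrative only: the class FrameBudget and its methods are hypothetical, frames are assumed to be uncompressed at 3 bytes per pixel (matching the buffer sizes in the sample code), and 60,000,000 bytes per second is the nominal USB 2.0 High Speed signaling rate of 480 Mbit/s. Real throughput is lower, so the times it prints are optimistic lower bounds.

```java
/** Sketch: compare raw frame sizes and best-case USB 2.0 transfer times. */
final class FrameBudget {
    // Nominal USB 2.0 High Speed rate: 480 Mbit/s = 60,000,000 bytes/s.
    // Achievable throughput is lower in practice.
    static final double USB2_BYTES_PER_SECOND = 480_000_000 / 8.0;

    /** Size of one uncompressed frame in bytes. */
    static long frameBytes(int width, int height, int channels) {
        return (long) width * height * channels;
    }

    /** Best-case seconds needed to move one frame at the given rate. */
    static double secondsPerFrame(long bytes, double bytesPerSecond) {
        return bytes / bytesPerSecond;
    }

    public static void main(String[] args) {
        long full = frameBytes(1920, 1080, 3);
        long small = frameBytes(640, 360, 3);
        System.out.println("1080P frame bytes: " + full);
        System.out.println("360P frame bytes:  " + small);
        System.out.println("size ratio:        " + (full / small));
        System.out.println("1080P best-case s: " + secondsPerFrame(full, USB2_BYTES_PER_SECOND));
        System.out.println("360P best-case s:  " + secondsPerFrame(small, USB2_BYTES_PER_SECOND));
    }
}
```

A 1080P frame carries nine times the data of a 360P frame, and even at the nominal rate it takes about a tenth of a second to transfer, which caps the stream below 10 frames per second.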

The full code is available on GitHub: SungemSDK-AndroidExamples