Image Recogniser (Low-level API Tutorial)

SqueezeNet 1000-Class Image Classifier

This chapter shows how to build an image classifier using only the low-level API.

- Tested on Ubuntu 16.04

Paths and files

- Python: SungemSDK-Python/examples/apps/ImageRecognition/ImageRecognition.py

- Model file: SungemSDK-Python/examples/graphs/graph_sz

Image Recognition

Run the image recogniser with the following command from the ImageRecognition directory:

```
~/SungemSDK/examples/apps/ImageRecognition$ python3 ImageRecognition.py
```

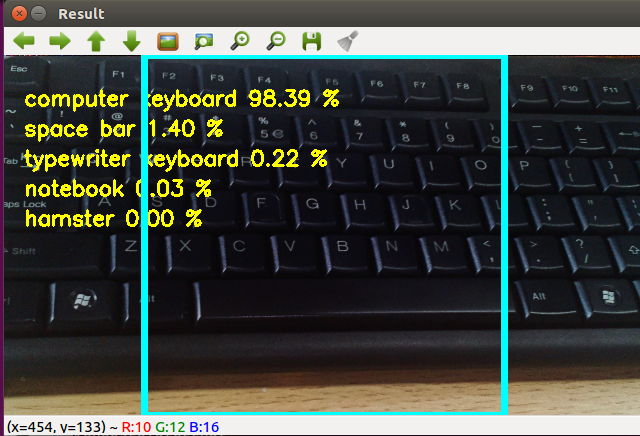

The region of interest (ROI) is highlighted with a cyan bounding box. Press 'w' to enlarge it and 's' to shrink it.

The top-5 classification results and their probabilities are drawn on the frame, as shown in the screenshot above.

Because only the low-level API is used, no messages are printed to the console.
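The cyan box is a square centred on the frame. In the code in this chapter its half-side is ROI_ratio times the frame height, so it spans rows cx-ROI to cx+ROI and columns cy-ROI to cy+ROI. The sketch below computes those bounds in plain Python. The helper name roi_bounds is hypothetical, and unlike the code in this chapter it clips the bounds to the frame, at the cost of a non-square crop when the ratio is very large.

```python
def roi_bounds(height, width, ratio):
    """Return (top, bottom, left, right) of the centred square ROI.

    Hypothetical helper mirroring the crop in this chapter:
    half-side = int(height * ratio), centred on the frame.
    Bounds are clipped so the slice never leaves the frame.
    """
    cx = int(height / 2)  # centre row (named cx in the tutorial code)
    cy = int(width / 2)   # centre column (named cy in the tutorial code)
    half = int(height * ratio)
    top = max(cx - half, 0)
    bottom = min(cx + half, height)
    left = max(cy - half, 0)
    right = min(cy + half, width)
    return top, bottom, left, right


# A 640x480 frame with ROI_ratio = 0.3 gives a 288x288 crop
print(roi_bounds(480, 640, 0.3))  # -> (96, 384, 176, 464)
```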

Parameter setting

Initialisation

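```python
import re

import cv2
import numpy as np
# The low-level API module is used as `hs`; see ImageRecognition.py for its import line.
# WEBCAM and ROI_ratio are settings defined in that script.

# Load device: open the first HS device found
devices = hs.EnumerateDevices()
dev = hs.Device(devices[0])
dev.OpenDevice()

# Load CNN model (the compiled SqueezeNet graph)
with open('../../graphs/graph_sz', mode='rb') as f:
    b = f.read()
graph = dev.AllocateGraph(b)
dim = (227, 227)  # network input size

# Load class labels, one per line
classes = np.loadtxt('../../misc/image_category.txt', str, delimiter='\t')

# Set camera mode: use the first webcam when WEBCAM is set
if WEBCAM:
    video_capture = cv2.VideoCapture(0)
```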
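np.loadtxt is called with a string dtype and a tab delimiter, so as long as a line contains no tab, each line of the label file becomes one string in classes. A rough standard-library equivalent is sketched below. The name load_labels is hypothetical, the label lines in the demo are made up, and the sketch only reproduces the one-string-per-line behaviour while skipping blank lines.

```python
import os
import tempfile


def load_labels(path):
    """Return one label string per non-blank line (hypothetical helper)."""
    with open(path, encoding='utf-8') as f:
        return [line.rstrip('\r\n') for line in f if line.strip()]


# Demo with a throwaway file; the two label lines here are made up
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False, encoding='utf-8') as tmp:
    tmp.write('n00000001 first label, alias\n\nn00000002 second label\n')
    demo_path = tmp.name

labels = load_labels(demo_path)
os.remove(demo_path)
print(labels)  # ['n00000001 first label, alias', 'n00000002 second label']
```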

Image classification

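```python
# Crop a square ROI around the image centre.
# Note: cx/cy are the row/column indices of the centre (y and x respectively).
sz = image_raw.shape  # (height, width, channels)
cx = int(sz[0] / 2)
cy = int(sz[1] / 2)
ROI = int(sz[0] * ROI_ratio)  # half the side length of the square
cropped = image_raw[cx-ROI:cx+ROI, cy-ROI:cy+ROI, :]

# Preprocessing: subtract the ImageNet mean in B, G, R channel order
cropped = cropped.astype(np.float32)
cropped[:, :, 0] -= 104  # blue
cropped[:, :, 1] -= 117  # green
cropped[:, :, 2] -= 123  # red

# Load to HS device (resized to the network input, as float16) and sort result
graph.LoadTensor(cv2.resize(cropped, dim).astype(np.float16), 'user object')
output, userobj = graph.GetResult()
output_label = output.argsort()[::-1][:5]  # indices of the 5 highest scores
```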
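output.argsort()[::-1][:5] sorts the class indices by ascending score, reverses them and keeps the first five, i.e. the indices of the five highest scores. The same selection can be written with heapq.nlargest from the standard library, which avoids a full sort. This sketch assumes only that the scores form an indexable sequence (a list or a 1-D NumPy array both work); top_k is a hypothetical name and the scores are made up. With tied scores the two approaches may order the tied indices differently.

```python
import heapq


def top_k(scores, k=5):
    """Indices of the k highest scores, best first."""
    return heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)


# Made-up scores for 8 classes
scores = [0.01, 0.30, 0.05, 0.02, 0.40, 0.07, 0.10, 0.04]
best = top_k(scores)
for idx in best:
    print("class %d %0.2f %%" % (idx, scores[idx] * 100))
```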

Visualisation

```python
# Display outcome: top-5 labels with their probabilities (yellow text)
for i in range(5):
    # Take the text between the synset ID (n + digits) and the first comma
    label = re.search(r"n[0-9]+\s([^,]+)", classes[output_label[i]]).group(1)
    cv2.putText(image_raw, "%s %0.2f %%" % (label, output[output_label[i]] * 100),
                (20, 50 + i * 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 255), 2)

# Plot bounding box (cyan in BGR) around the ROI
cv2.rectangle(image_raw, (cy-ROI, cx-ROI), (cy+ROI, cx+ROI), (255, 255, 0), 5)

# Display result image
cv2.imshow('Result', image_raw)
```
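The regular expression n[0-9]+\s([^,]+) matches a synset-style ID (the letter n followed by digits) and one whitespace character, then captures everything up to the first comma. The sketch below shows what is extracted from a made-up label line. The helper short_label is hypothetical, and it falls back to the whole line when the pattern does not match, whereas the tutorial code would fail calling .group on None.

```python
import re

# Synset ID, one whitespace character, then the first name up to a comma
LABEL_RE = re.compile(r"n[0-9]+\s([^,]+)")


def short_label(line):
    """Return the first class name on a label line (hypothetical helper)."""
    m = LABEL_RE.search(line)
    return m.group(1) if m else line  # fall back to the raw line


print(short_label("n00000001 first label, alias"))  # first label
print(short_label("no synset id here"))
```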

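```python
# Detect keyboard press: 'w' enlarges the ROI, 's' shrinks it
key = cv2.waitKey(1)
if key == ord('w'):
    ROI_ratio += 0.1
elif key == ord('s'):
    ROI_ratio -= 0.1
# Keep ROI_ratio within [0.1, 0.5] so the crop stays non-empty and,
# for a landscape frame, inside the image
ROI_ratio = min(max(ROI_ratio, 0.1), 0.5)
```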
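Pulling the keyboard handling into a small function makes the ROI limits easy to check without a camera or device. In this sketch adjust_roi_ratio is a hypothetical name, and the 0.5 upper limit is an assumption chosen so that, with the half-side computed from the frame height, the crop stays inside a landscape frame; the step of 0.1 and the lower limit of 0.1 come from the code above.

```python
def adjust_roi_ratio(ratio, key, step=0.1, low=0.1, high=0.5):
    """Return the new ROI ratio after a key press (hypothetical helper).

    key is the value returned by cv2.waitKey: 'w' grows the ROI,
    's' shrinks it, any other key (or -1 for no key) leaves it unchanged.
    """
    if key == ord('w'):
        ratio += step
    elif key == ord('s'):
        ratio -= step
    # Clamp, and round to avoid drifting values such as 0.30000000000000004
    return round(min(max(ratio, low), high), 2)


ratio = 0.3
for k in (ord('w'), ord('w'), ord('w'), ord('s')):
    ratio = adjust_roi_ratio(ratio, k)
print(ratio)
```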